Why My LLM Can’t Count the “R”s in Strawberry?

Published on April 11, 2025 · AI LLMs Limitations Tokenization

TL;DR: Your LLM can’t reliably count the r’s in strawberry because its entire pipeline—from tokenization to vectorization to attention—optimizes for meaning over mechanics. Counting is a symbolic operation that requires character access and an algorithm; standard LLMs have neither by default. Give them character-level visibility or a small tool to do the job, and the problem disappears.

Large language models can explain relativity, crack jokes, help you draft a grant proposal, and even write a haiku about your cat. But then you ask:

“How many r’s are in strawberry?”

…and suddenly the mighty machine collapses.

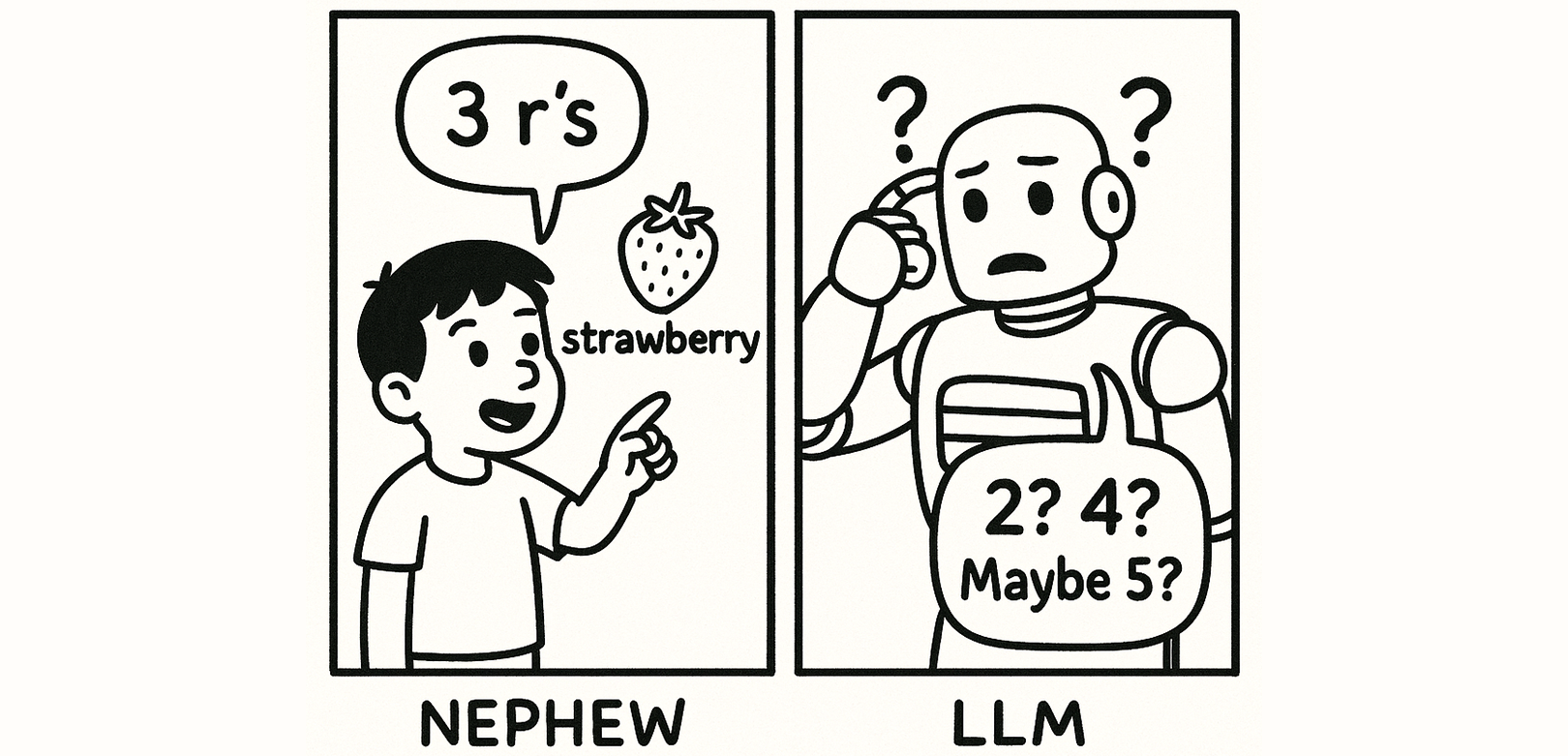

This isn’t just funny, it’s deeply revealing. My 6-year-old nephew nails this instantly, yet the same model that can reason about quantum entanglement flubs a 10-letter spelling puzzle. Why? Let’s understand why:

1. Tokenization Fractures Words Into Chunks

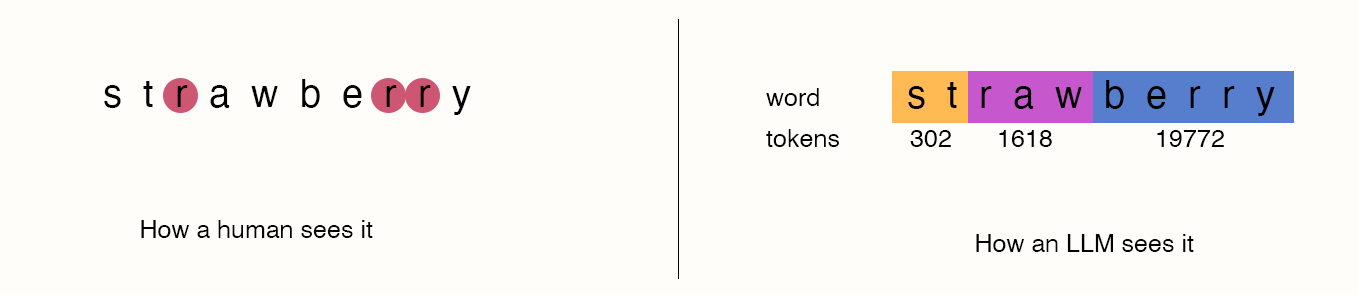

Tokenization is how an LLM takes human text and turns it into math. Instead of seeing “s–t–r–a–w–b–e–r–r–y,” the model might see:

["straw", "berry"]["st", "raw", "berry"]converting it into numbers like[302, 1618, 19772]

My nephew sees 10 letters. The LLM sees a handful of chunks.

Here’s the kicker: tokenization is context-dependent. The standalone word strawberry might split one way, but in the sentence “How many r’s are in strawberry?” it could be a single token. To the model, strawberry might look like just one indivisible token.

See how ChatGPT tokenizes input using OpenAI Tokenizer .

2. LLMs are stochastic autoregressors

All current chatbots are autoregressors: they predict the next token based on probabilities from training. That means:

- They don’t “calculate.”

- They don’t “scan.”

- They guess the next likely thing.

Think about it: when was the last time you, or anyone, wrote “strawberry has 3 r’s” on the internet? Basically never. So the model doesn’t have this tucked in memory. Instead, it reaches into its bag of probabilities and blurts out something plausible, like “two” or “four.”

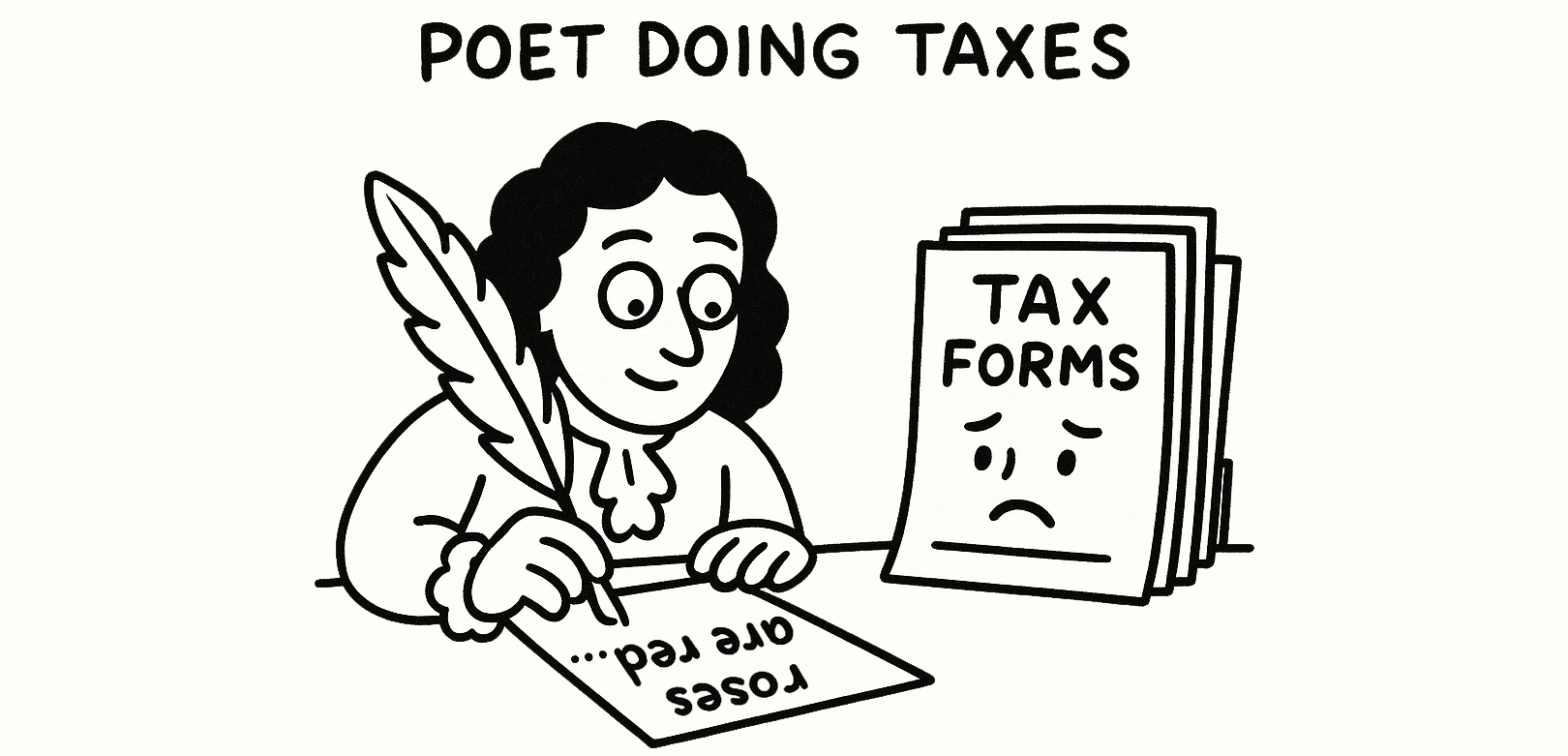

It’s like asking a poet to do your taxes: they’ll give you something plausible and be confident about it, but you probably shouldn’t file it with the IRS.

How LLMs “See” Text (A Bit Deeper)

- Tokens → Vectors

Each token becomes a high-dimensional vector that captures distributional properties. These vectors are ideal for meaning, analogy, and context, but not for recovering exact character strings. - Attention runs over tokens, not letters

Self-attention relates tokens to other tokens. Positional encodings tell the model where tokens sit, but positions refer to tokens—not characters. - Why “just learn counting” is hard

Counting requires a discrete, stepwise algorithm (scan → accumulate → compare). Transformers can emulate such routines with supervision, but it’s brittle.

How to Get the Right Answer (Reliable Workarounds)

1) Force character-level visibility in the prompt

- Space out the letters: “Count the ‘r’s in

s t r a w b e r r y.” - Use separators: “Count the ‘r’s in

s|t|r|a|w|b|e|r|r|y.”

2) Ask the model to spell first, then count

- “Spell strawberry with spaces between each letter.”

- “Now count the number of ‘r’ characters you just wrote.”

3) Use a tool for deterministic counting

word = "strawberry"

sum(c.lower() == "r" for c in word)4) Finetune or choose character-aware models

If letter-precise tasks are central, use character/byte-level tokenization or finetune on character-level objectives. Still, deterministic fallbacks are best.

Quick Reference: Prompt Patterns That Work

- Simple spacing: “Write strawberry as individual letters separated by spaces, then count the number of ‘r’s.”

- Verification step: “Show the letters you are counting, then give the count as a final number.”

- Constrained output: “Return JSON with fields

letters(array of characters) andcount_r(integer).”

Practical Takeaways

- Expectation setting: LLMs are phenomenal semantic engines, not symbol counters.

- Design principle: When you need exactness, offload to a tool or force character-level view.

- Robustness over cleverness: A one-line helper function is more reliable than prompt wizardry.

Final Word

The strawberry test is funny, but it’s also profound. It reminds us that LLMs are masters of meaning, not mechanics. They’ll wax lyrical about strawberries and their antioxidant properties, but ask them to count r’s and you’ve got a confused poet fumbling with a spelling quiz.

So when precision matters? Don’t leave it to the poet. Hand them a calculator. Or better yet, let your six-year-old nephew check the spelling. He won’t miss.